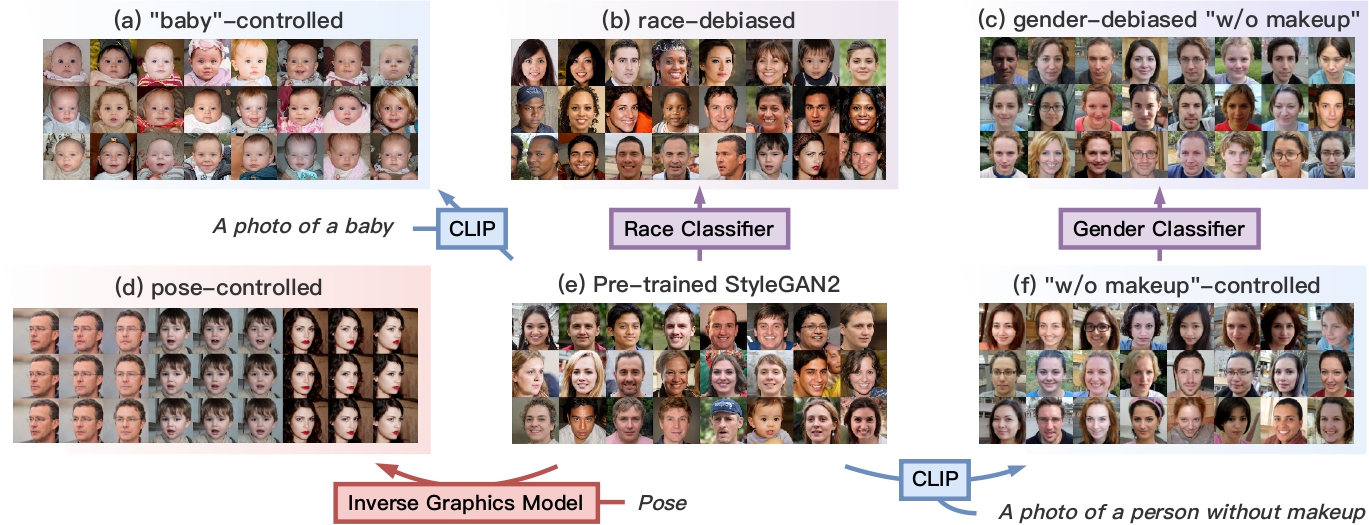

Generative models (e.g., GANs and diffusion models) learn the underlying data distribution in an unsupervised manner. However, many applications of interest require sampling from a particular region of the output space or sampling evenly over a range of characteristics. For efficient sampling in these scenarios, we propose Generative Visual Prompt (PromptGen), a framework for distributional control over pre-trained generative models that incorporates knowledge from other off-the-shelf models. PromptGen defines control as energy-based models (EBMs) and samples images in a feed-forward manner by approximating the EBM with invertible neural networks, which avoids optimization at inference time. Our experiments show that PromptGen can efficiently sample from several unconditional generative models (e.g., StyleGAN2, StyleNeRF, diffusion autoencoder, NVAE) in a controlled and/or de-biased manner using various off-the-shelf models: (1) with the CLIP model as control, PromptGen can sample images guided by text; (2) with image classifiers as control, PromptGen can help de-bias generative models across a set of attributes or attribute combinations; and (3) with inverse graphics models as control, PromptGen can sample images of the same identity in different poses. Finally, PromptGen reveals that the CLIP model shows a "reporting bias" when used as control, and PromptGen can further de-bias this controlled distribution in an iterative manner.
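The idea of approximating an EBM with an invertible map so that sampling becomes a single forward pass can be illustrated with a deliberately small sketch. Everything in it is an assumption made for illustration and is not the paper's implementation: the latent is one-dimensional, the invertible network is reduced to an affine map `z = mu + sigma * eps`, the energy is a hypothetical quadratic `E(z) = 0.5 * (z - target)**2` standing in for the score of an off-the-shelf model, and `lam` is a hypothetical control strength. The map is fitted by minimizing the KL divergence from its output distribution to the target `p(z) ∝ N(z; 0, 1) * exp(-lam * E(z))`.

```python
import math
import random


def energy_grad(z, target):
    # Gradient of the hypothetical energy E(z) = 0.5 * (z - target)**2.
    # In a real setting this would come from an off-the-shelf model.
    return z - target


def fit_affine_flow(target=2.0, lam=3.0, steps=500, lr=0.05, batch=256, seed=0):
    """Fit z = mu + sigma * eps so its distribution approximates
    p(z) proportional to N(z; 0, 1) * exp(-lam * E(z)).

    Minimizes KL(q || p) up to a constant:
        loss = -log(sigma) + mean(U(z)),  U(z) = 0.5 * z**2 + lam * E(z)
    using Monte Carlo gradients with respect to mu and log(sigma).
    """
    rng = random.Random(seed)
    mu, log_sigma = 0.0, 0.0
    for _step in range(steps):
        sigma = math.exp(log_sigma)
        grad_mu = 0.0
        grad_log_sigma = 0.0
        for _sample in range(batch):
            eps = rng.gauss(0.0, 1.0)
            z = mu + sigma * eps                      # invertible (affine) map
            d_u = z + lam * energy_grad(z, target)    # dU/dz
            grad_mu += d_u
            grad_log_sigma += d_u * sigma * eps
        mu -= lr * grad_mu / batch
        # The -1 term is the gradient of the log-determinant term -log(sigma).
        log_sigma -= lr * (grad_log_sigma / batch - 1.0)
    return mu, math.exp(log_sigma)


def sample(mu, sigma, n, seed=1):
    # Feed-forward sampling: one pass through the fitted map, no optimization.
    rng = random.Random(seed)
    return [mu + sigma * rng.gauss(0.0, 1.0) for _ in range(n)]


if __name__ == "__main__":
    mu, sigma = fit_affine_flow()
    print(mu, sigma)
```

For this quadratic toy energy the target is itself Gaussian, with mean `lam * target / (1 + lam)` and standard deviation `1 / sqrt(1 + lam)` (1.5 and 0.5 under the defaults), so the fitted `mu` and `sigma` can be checked against the closed form. A real invertible network and a learned energy would not admit such a check.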

text description: Photo of a cat with closed eyes

text description: Photo of a British shorthair

text description: Photo of a happy person

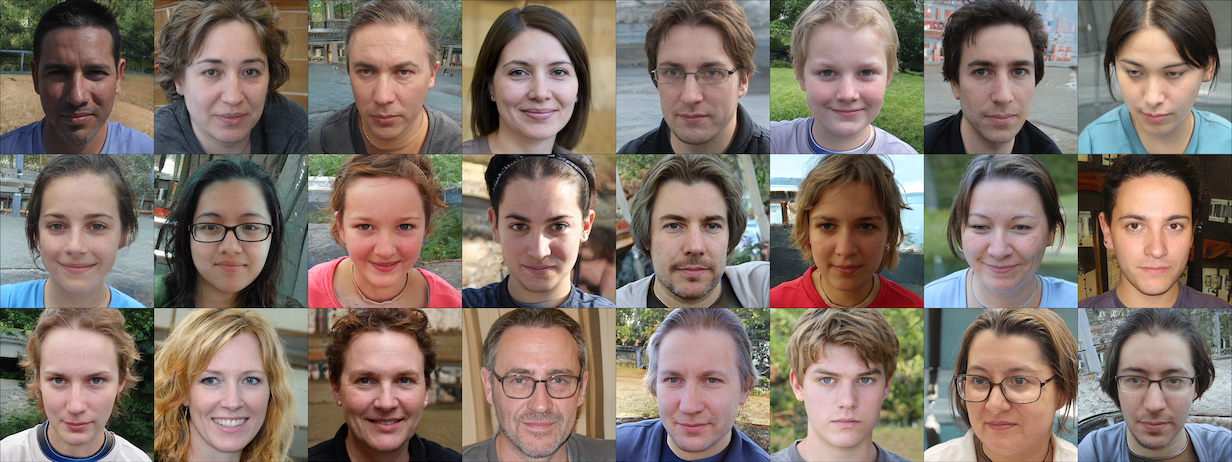

text description: Photo of a person without makeup (before de-biasing)

text description: Photo of a person without makeup (after de-biasing)